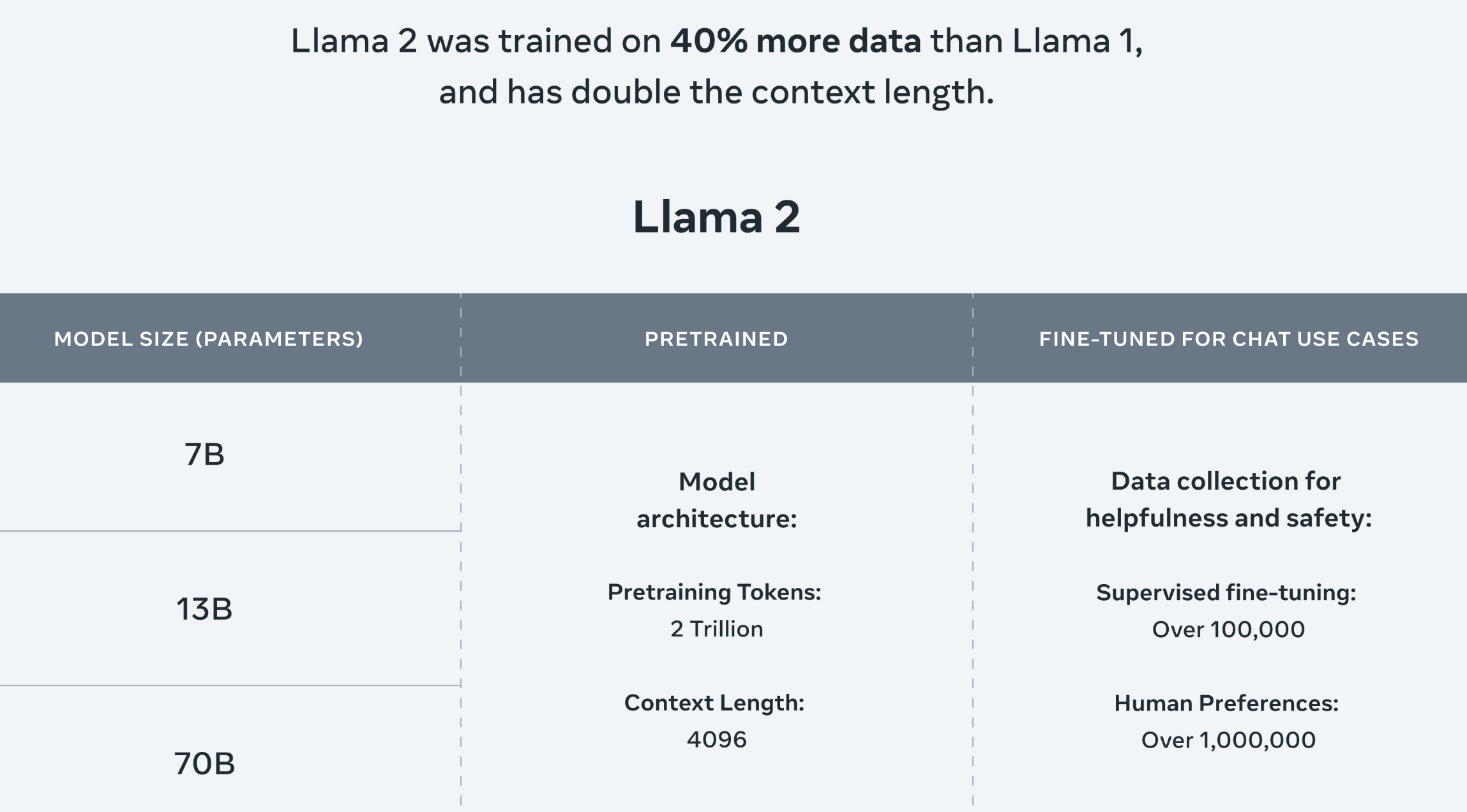

Llama 2 Community License Agreement. Llama 2 Version Release Date: July 18, 2023. "Agreement" means the terms and conditions for use, reproduction, distribution and ... Getting started with Llama 2: create a conda environment with PyTorch and additional dependencies, then download the desired model from Hugging Face, either using git-lfs or using the Llama download script. Llama 2 models are trained on 2 trillion tokens and have double the context length of Llama 1. Llama Chat models have additionally been trained on over 1 million new human annotations. The license for the Llama LLM is very plainly not an Open Source license. Meta is making some aspect of its large language model available to ... The Llama 2 model comes with a license that allows the community to use, reproduce, distribute, copy, create derivative works of, and make modifications to the Llama Materials.

Llama 2 is intended for commercial and research use in English. Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 ... Llama 2 was pretrained on publicly available online data sources. In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large ... Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. Llama 2 is a family of state-of-the-art open-access large language models released by Meta.
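To make the 7 billion to 70 billion parameter range concrete, the sketch below estimates the memory needed just to hold the weights at different numeric precisions (parameters times bytes per parameter). The function name `weight_memory_gb` and the precision table are illustrative assumptions, not part of any Llama tooling. The 13B size is not named in the text above but belongs to the released family. Activations, optimizer state, and the KV cache are deliberately ignored, so real usage will be higher.

```python
# Rough, weight-only memory estimate: parameters x bytes per parameter.
# Hypothetical helper for illustration; it ignores activations,
# optimizer state, and the KV cache.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Llama 2 sizes: 7B, 13B, and 70B parameters.
    for n_params in (7e9, 13e9, 70e9):
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(n_params, dtype):.1f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{n_params / 1e9:.0f}B -> {row}")
```

Under these assumptions the 7B model needs about 14 GB for fp16 weights alone, which is why quantized weights are commonly used when working on a single small GPU.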

How to Fine-Tune Llama 2: In this part, we will learn about all the steps required to fine-tune the Llama 2 model with 7 billion parameters on a T4 GPU. Compared to Llama 1, Llama 2 doubles the context length from roughly 2,000 to 4,000 tokens (2,048 to 4,096 exactly) and uses grouped-query attention (only for the 70B model). Llama 2 pretrained models are trained on 2 trillion tokens. Using AWS Trainium and Inferentia based instances through SageMaker can help users lower fine-tuning costs by up to 50% and lower deployment costs by 4.7x, while ... Fine-tuning a large language model (LLM) comes with tons of benefits when compared to relying on proprietary foundational models such as OpenAI's GPT models. Key concepts in LLM fine-tuning: supervised fine-tuning (SFT), reinforcement learning from human feedback (RLHF), and the prompt template.
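As an illustration of the prompt template concept, here is a minimal sketch of the single-turn format used by the Llama 2 chat models, with `[INST]` / `[/INST]` around the user turn and an optional `<<SYS>>` block for the system prompt. The function name `build_llama2_chat_prompt` is a hypothetical helper, not a library API. The sketch assumes the tokenizer adds the beginning-of-sequence token itself and does not cover multi-turn conversations.

```python
from typing import Optional


def build_llama2_chat_prompt(
    user_message: str, system_prompt: Optional[str] = None
) -> str:
    """Format one user turn in the Llama 2 chat style.

    Hypothetical helper for illustration. The BOS token (<s>) is left
    to the tokenizer, and only a single turn is handled.
    """
    user_message = user_message.strip()
    if system_prompt:
        # The system prompt is wrapped in <<SYS>> tags inside the first [INST].
        system_block = f"<<SYS>>\n{system_prompt.strip()}\n<</SYS>>\n\n"
    else:
        system_block = ""
    return f"[INST] {system_block}{user_message} [/INST]"


if __name__ == "__main__":
    print(build_llama2_chat_prompt(
        "Summarize the Llama 2 license in one sentence.",
        system_prompt="You are a concise assistant.",
    ))
```

When fine-tuning a chat model, training examples are usually formatted with the same template the model will see at inference time, so a helper like this would be applied to both.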

In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale ... We have a broad range of supporters around the world who believe in our open approach to today's AI: companies that have given early feedback and are ... Llama 2, a product of Meta, represents the latest advancement in open-source large language models (LLMs). It has been trained on a massive ...
